Packet forwarding is the invisible force that keeps the digital world connected. Every time you browse a website, send an email, or attend a video call, packets—tiny units of data—are being forwarded across networks to deliver your content. In the simplest sense, packet forwarding is the process of moving these packets from one network device to another until they reach their destination. But beneath this simplicity lies a sophisticated mechanism that ensures data travels efficiently and securely, especially in today’s complex enterprise environments.

Why Should You Care About Packet Forwarding?

If you’re working with networks, packet forwarding is a concept you cannot ignore. It’s at the heart of ensuring reliable communication within and between networks. Poorly configured forwarding leads to slow networks, bottlenecks, and even security vulnerabilities. Understanding how packets find their way through a network enables you to design faster, more resilient, and secure infrastructures. Whether you’re optimizing performance for a data center or simply troubleshooting a slow office network, knowing how forwarding works is essential.

Layer 2 vs. Layer 3 Forwarding – When and Why Each Matters

In smaller networks or within a local environment, devices communicate using Layer 2 forwarding. Think about an office with computers connected to a single switch. Here, devices identify each other by MAC addresses. The switch forwards frames between its ports based on the destination MAC address, and it learns where each address lives by recording the source MAC address of every frame it receives. This method is fast and efficient for local communication, but on its own it cannot deliver traffic to devices on other networks (other IP subnets).

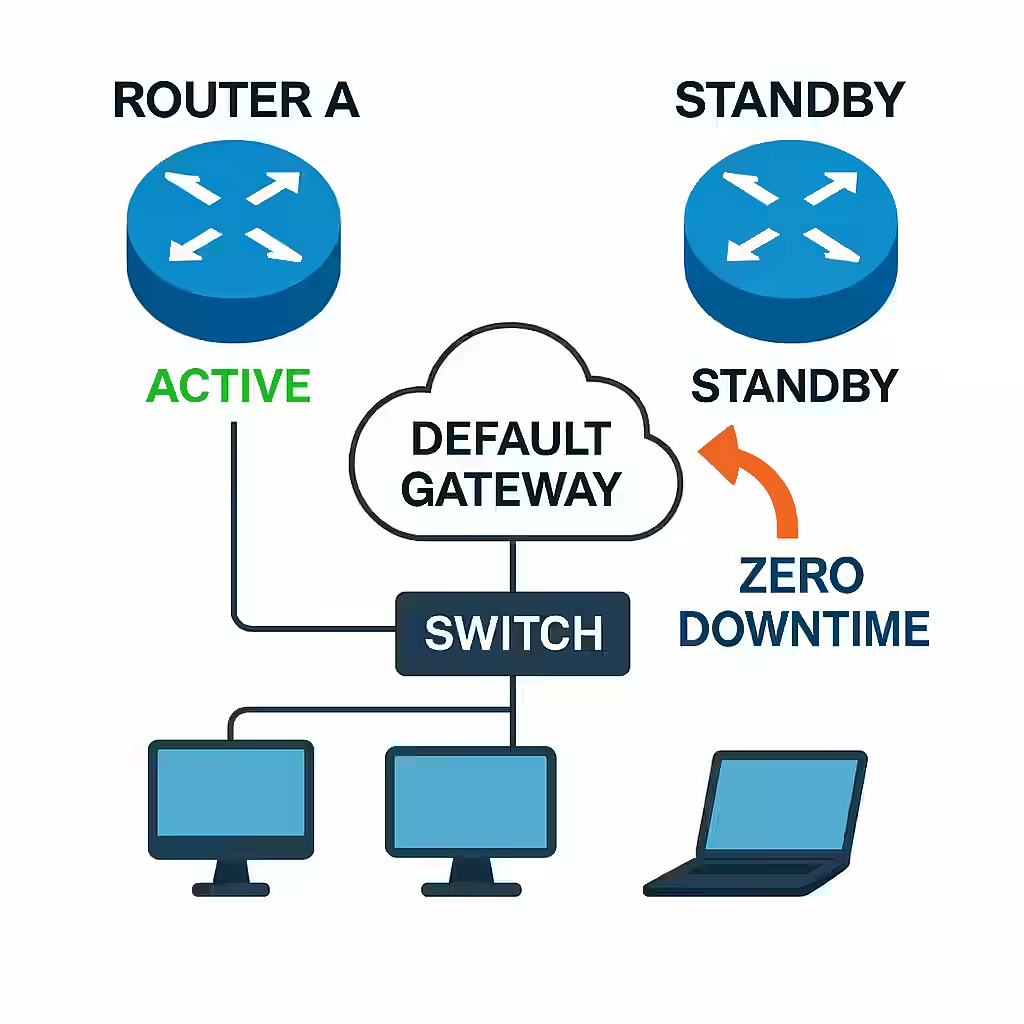
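To make the learning behavior concrete, here is a minimal Python sketch of a transparent learning switch. The class name, port numbers, and MAC addresses are hypothetical. Real switches also age out entries, handle VLANs, and do all of this in hardware; the sketch only shows the learn, forward, flood, and filter decisions.

```python
from typing import Dict, List


class LearningSwitch:
    """Hypothetical model of a transparent learning switch."""

    def __init__(self, ports: List[int]) -> None:
        self.ports = ports
        self.mac_table: Dict[str, int] = {}  # MAC address -> port it was learned on

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        """Return the ports a frame arriving on in_port is sent out of."""
        # Learn: the sender is reachable via the port the frame arrived on.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown (or broadcast) destination: flood to all other ports.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination is on the ingress segment: filter the frame.
            return []
        return [out_port]


sw = LearningSwitch(ports=[1, 2, 3, 4])
print(sw.receive(1, "aa:aa:aa:aa:aa:01", "aa:aa:aa:aa:aa:02"))  # [2, 3, 4] (flooded)
print(sw.receive(2, "aa:aa:aa:aa:aa:02", "aa:aa:aa:aa:aa:01"))  # [1] (learned earlier)
```

The first frame is flooded because the destination has not been seen yet; the reply is forwarded out of a single port because the switch learned the original sender's location from the first frame.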

That’s where Layer 3 forwarding, or routing, becomes crucial. When data needs to leave the local network—perhaps to access the internet or reach a server in another branch—routers come into play. These devices work with IP addresses and make decisions based on routing tables, determining the best path for data to travel across networks.

Let’s take a practical example. Imagine a company with separate networks for its Finance and HR departments. Although both departments are in the same building, their networks are segmented for security reasons. A Layer 3 device is needed to allow selective communication between these networks while enforcing access controls. Without this, the Finance department wouldn’t be able to securely access a central accounting application hosted on a different subnet.
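A rough sketch of the access-control decision such a Layer 3 device makes between the two segments, in Python. The subnets, server address, and port are invented for illustration, and the rules ignore the protocol field for brevity. The evaluation order (first match wins, unmatched traffic is denied) mirrors the usual behavior of Cisco ACLs rather than any specific configuration.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    action: str                  # "permit" or "deny"
    src: ipaddress.IPv4Network
    dst: ipaddress.IPv4Network
    dst_port: Optional[int]      # None matches any destination port


def check(rules: List[Rule], src_ip: str, dst_ip: str, dst_port: int) -> str:
    """First matching rule wins; anything unmatched is denied (implicit deny)."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    for rule in rules:
        if src in rule.src and dst in rule.dst and rule.dst_port in (None, dst_port):
            return rule.action
    return "deny"


net = ipaddress.ip_network
rules = [
    # Hypothetical: Finance (10.10.10.0/24) may reach the accounting server on port 443 only.
    Rule("permit", net("10.10.10.0/24"), net("10.10.30.10/32"), 443),
    # Hypothetical: HR (10.10.20.0/24) is explicitly blocked from the Finance subnet.
    Rule("deny", net("10.10.20.0/24"), net("10.10.10.0/24"), None),
]

print(check(rules, "10.10.10.25", "10.10.30.10", 443))  # permit
print(check(rules, "10.10.20.7", "10.10.10.25", 22))    # deny (explicit rule)
print(check(rules, "10.10.20.7", "10.10.30.10", 443))   # deny (implicit)
```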

How Does Packet Forwarding Actually Happen?

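When a device sends data, it first compares the destination IP address with its own address and subnet mask to determine whether the destination is on its local network. If it is, the device resolves the destination’s MAC address (using ARP, for IPv4) and the switch forwards the frame directly based on that MAC address. If not, the device sends the packet to its default gateway, a router, which then decides where to forward it next.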
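The host-side part of this decision can be sketched with Python’s standard `ipaddress` module. The function name and addresses are hypothetical. The point is that the host compares the destination with its own subnet and, depending on the result, resolves the MAC address of either the destination itself or the default gateway.

```python
import ipaddress


def next_hop(src_interface: str, default_gateway: str, dst_ip: str) -> str:
    """Return the IP address whose MAC the host must resolve (via ARP) to reach dst_ip."""
    iface = ipaddress.ip_interface(src_interface)  # host address plus prefix length
    dst = ipaddress.ip_address(dst_ip)
    if dst in iface.network:
        # Same subnet: deliver directly, so resolve the destination itself.
        return str(dst)
    # Different subnet: hand the packet to the router, so resolve the gateway.
    return default_gateway


print(next_hop("192.168.1.10/24", "192.168.1.1", "192.168.1.42"))  # 192.168.1.42
print(next_hop("192.168.1.10/24", "192.168.1.1", "8.8.8.8"))       # 192.168.1.1
```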

Modern Cisco routers and switches rely on Cisco Express Forwarding (CEF) to make these decisions quickly. Rather than working out a route for every packet, CEF precomputes two data structures, the Forwarding Information Base (FIB) and the adjacency table, so each forwarding decision becomes a fast table lookup. This keeps forwarding delay low even in large-scale environments such as data centers and cloud infrastructures.

Real-World Impact of Efficient Packet Forwarding

In latency-sensitive environments such as financial trading, even a few milliseconds of added delay can be costly. Similarly, in healthcare, the speed and reliability of packet forwarding can directly affect critical services like remote diagnostics and real-time patient monitoring.

From a business perspective, faster packet forwarding means smoother video conferences, quicker access to cloud applications, and better overall user satisfaction. It also means that network hardware is used efficiently, reducing the need for costly upgrades.

Technologies Related to Packet Forwarding

1. Cisco Express Forwarding (CEF)

CEF is the cornerstone of modern Cisco packet forwarding. It addresses the need for faster packet processing by avoiding repetitive lookups in the routing table. Instead, CEF pre-builds two critical data structures:

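- FIB (Forwarding Information Base): A copy of the best routes from the routing table, organized for fast longest-prefix-match lookups on the destination IP address.

- Adjacency Table: Prebuilt Layer 2 rewrite information (such as the next hop’s MAC address) for each next hop, so the outgoing frame header does not have to be resolved for every packet.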
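The lookup sequence can be illustrated with a small Python model, assuming a handful of hypothetical prefixes, interfaces, and MAC addresses (taken from documentation ranges). Real CEF stores the FIB in a trie-like structure optimized for longest-prefix match; this sketch simply scans all prefixes, picks the most specific match, and then reads the prebuilt Layer 2 rewrite from the adjacency table.

```python
import ipaddress
from typing import Dict, Optional, Tuple

Net = ipaddress.IPv4Network

# Hypothetical FIB: prefix -> (next-hop IP, outgoing interface).
FIB: Dict[Net, Tuple[str, str]] = {
    ipaddress.ip_network("10.0.0.0/8"): ("192.0.2.1", "Gi0/1"),
    ipaddress.ip_network("10.1.0.0/16"): ("192.0.2.5", "Gi0/2"),
    ipaddress.ip_network("0.0.0.0/0"): ("198.51.100.1", "Gi0/0"),  # default route
}

# Hypothetical adjacency table: (next hop, interface) -> prebuilt L2 rewrite (dst MAC, src MAC).
ADJACENCY: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("192.0.2.1", "Gi0/1"): ("00:00:5e:00:53:01", "00:00:5e:00:53:a1"),
    ("192.0.2.5", "Gi0/2"): ("00:00:5e:00:53:05", "00:00:5e:00:53:a2"),
    ("198.51.100.1", "Gi0/0"): ("00:00:5e:00:53:10", "00:00:5e:00:53:a0"),
}


def forward(dst_ip: str) -> Optional[Tuple[str, Tuple[str, str]]]:
    """Return (egress interface, L2 rewrite) for dst_ip, or None if there is no route."""
    dst = ipaddress.ip_address(dst_ip)
    matches = [net for net in FIB if dst in net]
    if not matches:
        return None  # no matching prefix: drop
    best = max(matches, key=lambda net: net.prefixlen)  # longest prefix wins
    next_hop, interface = FIB[best]
    return interface, ADJACENCY[(next_hop, interface)]


print(forward("10.1.2.3")[0])     # Gi0/2 (the /16 beats the /8)
print(forward("10.9.9.9")[0])     # Gi0/1
print(forward("203.0.113.7")[0])  # Gi0/0 (default route)
```

Because both tables are filled in before traffic arrives, the per-packet work is just two lookups; nothing has to be resolved on the fly.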

With these tables ready in memory, routers and switches can make forwarding decisions almost instantly. CEF is enabled by default on most modern Cisco platforms and is especially critical in high-speed environments such as data centers and large campuses.

Verification Command:

show ip cef

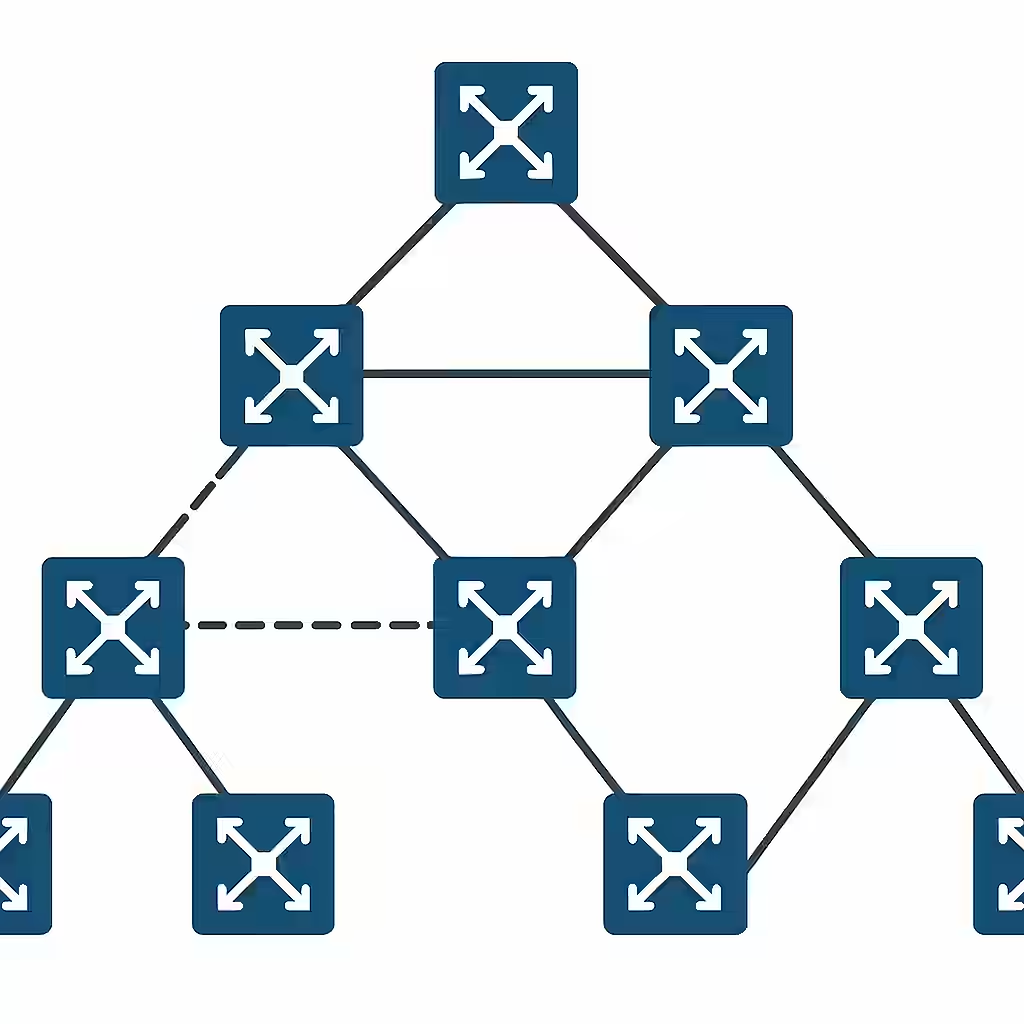

2. Distributed Forwarding (dCEF)

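In high-performance modular platforms, such as a Cisco Catalyst 6500 equipped with Distributed Forwarding Cards or chassis-based Nexus data center switches, forwarding decisions can be offloaded from the central route processor to dedicated line cards. This is known as distributed forwarding; on Cisco IOS platforms it is called distributed CEF (dCEF).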

Each line card maintains its own copy of the FIB and adjacency tables, kept synchronized by the route processor, so packets are processed locally on the line card without burdening the central processor. This is essential for achieving low latency and high throughput in enterprise and service provider networks.

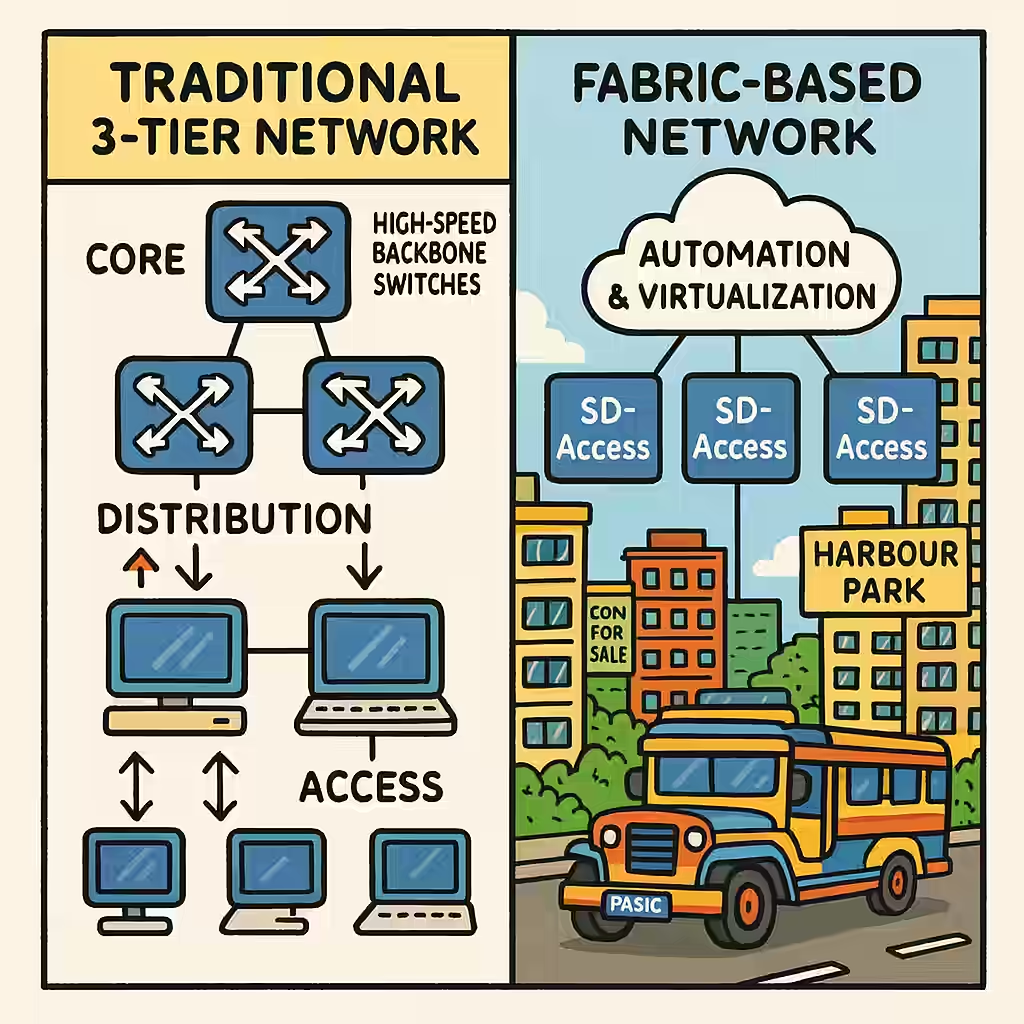
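A simplified Python model of this division of labor, assuming hypothetical class names, slot names, and interfaces: the route processor owns the master FIB and pushes each change to every line card, and each card then answers lookups from its local copy. Adjacency tables, route withdrawals, and hardware programming are left out to keep the sketch short.

```python
import ipaddress
from typing import Dict, List


class LineCard:
    """Holds a local FIB copy and forwards without consulting the route processor."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.fib: Dict[ipaddress.IPv4Network, str] = {}  # prefix -> egress interface

    def lookup(self, dst_ip: str) -> str:
        dst = ipaddress.ip_address(dst_ip)
        matches = [net for net in self.fib if dst in net]
        if not matches:
            return "drop"
        return self.fib[max(matches, key=lambda n: n.prefixlen)]  # longest prefix wins


class RouteProcessor:
    """Owns the master FIB and pushes every change to all line cards."""

    def __init__(self, line_cards: List[LineCard]) -> None:
        self.fib: Dict[ipaddress.IPv4Network, str] = {}
        self.line_cards = line_cards

    def install(self, prefix: str, interface: str) -> None:
        net = ipaddress.ip_network(prefix)
        self.fib[net] = interface
        for card in self.line_cards:  # keep each card's copy in sync
            card.fib[net] = interface


cards = [LineCard("slot1"), LineCard("slot2")]
rp = RouteProcessor(cards)
rp.install("10.0.0.0/8", "Te1/1")
rp.install("10.20.0.0/16", "Te2/1")

# Each card now answers locally; the route processor is not in the per-packet path.
print(cards[0].lookup("10.20.5.5"))   # Te2/1
print(cards[1].lookup("10.3.3.3"))    # Te1/1
print(cards[1].lookup("172.16.0.1"))  # drop
```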

3. Software-Defined Access (SD-Access)

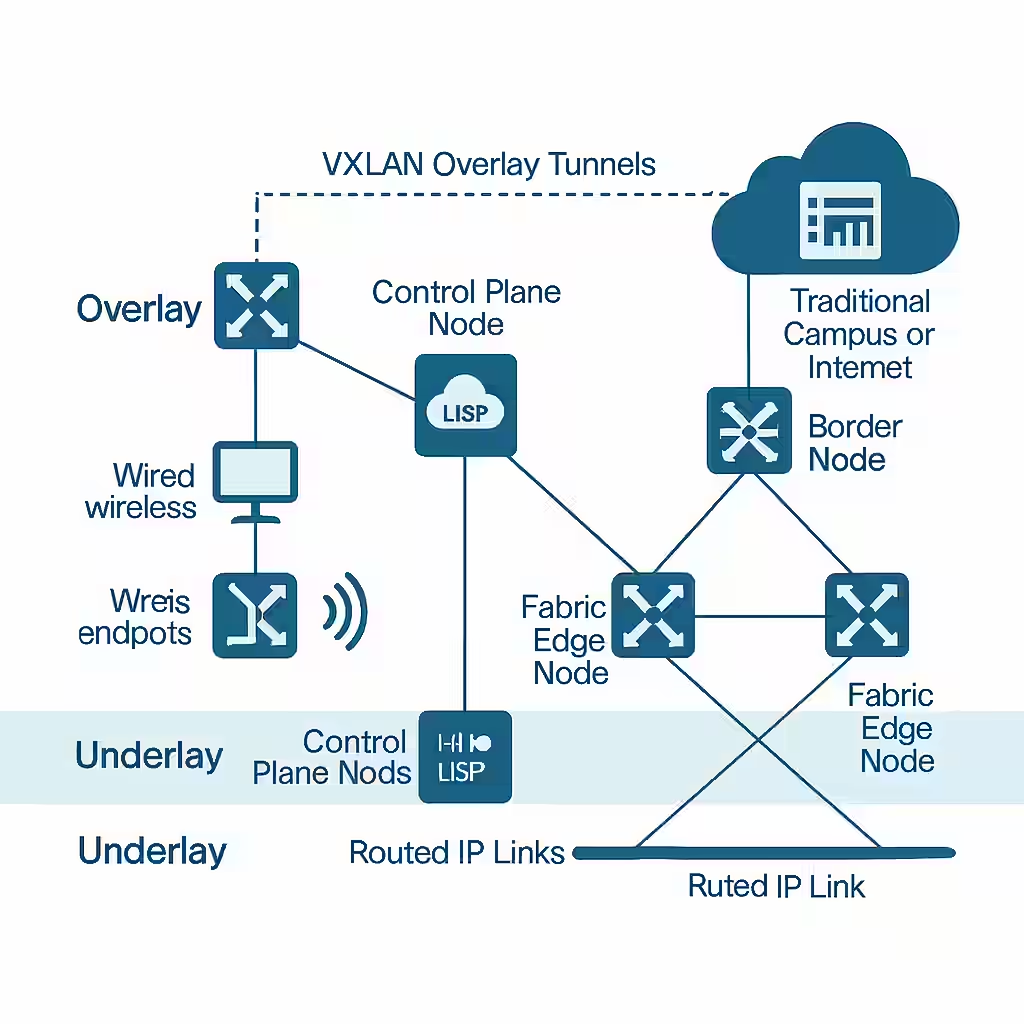

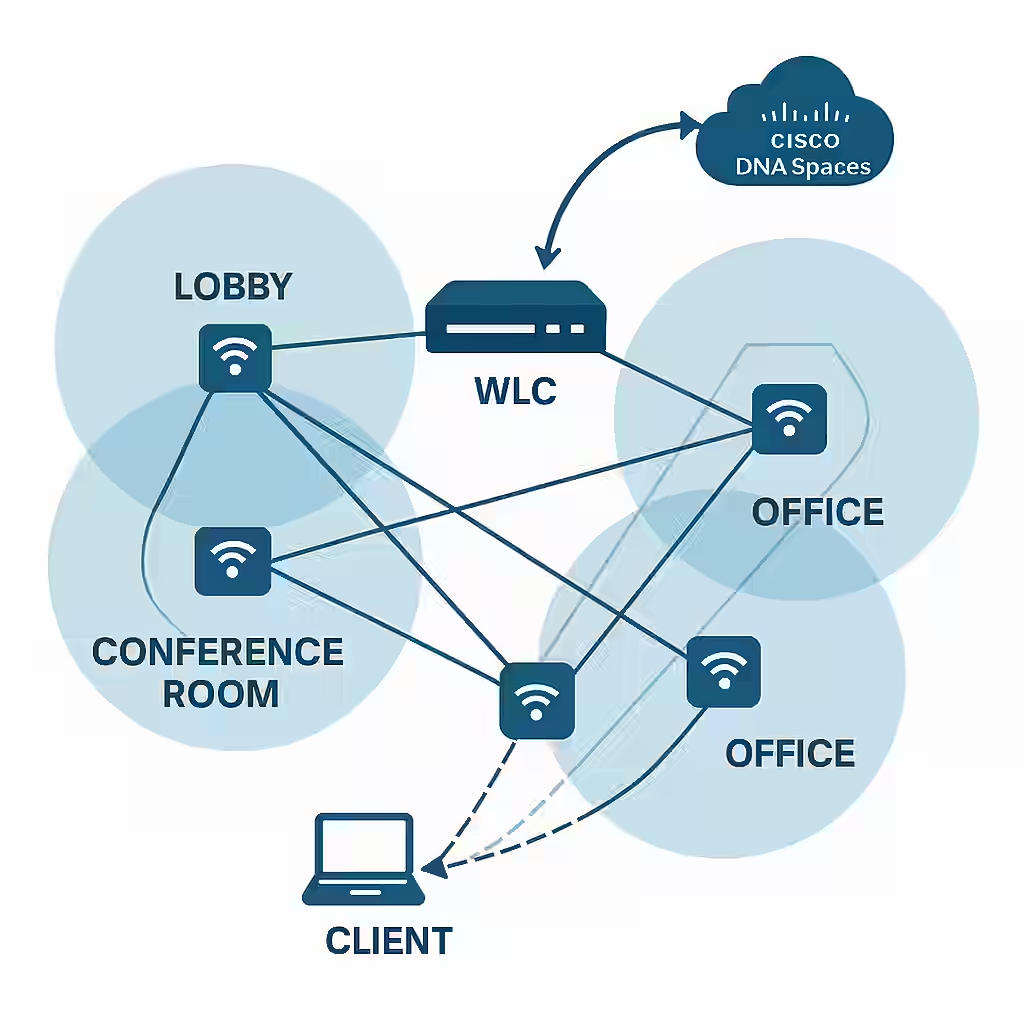

Cisco SD-Access introduces a modern way of handling packet forwarding through fabric-based architectures. Using LISP (the Locator/ID Separation Protocol), it separates an endpoint’s identity (its IP or MAC address) from its location (the fabric node it is attached to), allowing seamless mobility and simplified policy enforcement.

In SD-Access environments, forwarding decisions aren’t based solely on IP and MAC addresses. Policy also takes into account user identity, device type, and group membership, which are carried in traffic as Scalable Group Tags (SGTs) and managed centrally by Cisco DNA Center.

Key Technologies Used:

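- LISP as the control plane, mapping each endpoint identity to the fabric node where it is currently located.

- VXLAN as the data plane, providing network virtualization and encapsulating traffic between fabric nodes.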
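The identity/location split can be sketched in Python as a mapping service that edge nodes register with and query. All names and addresses here are hypothetical. Real LISP edge nodes cache mappings and exchange dedicated Map-Register and Map-Request messages; the sketch only shows why an endpoint can move between edge nodes while keeping its own address.

```python
from typing import Dict, Optional, Tuple


class MapServer:
    """Hypothetical mapping service: endpoint identifier (EID) -> routing locator (RLOC)."""

    def __init__(self) -> None:
        self.registrations: Dict[str, str] = {}

    def register(self, eid: str, rloc: str) -> None:
        # A newer registration replaces the old one, e.g. after the endpoint moves.
        self.registrations[eid] = rloc

    def resolve(self, eid: str) -> Optional[str]:
        return self.registrations.get(eid)


class EdgeNode:
    """Hypothetical fabric edge node identified by its own locator address."""

    def __init__(self, rloc: str, map_server: MapServer) -> None:
        self.rloc = rloc
        self.map_server = map_server

    def attach(self, eid: str) -> None:
        # An endpoint connects here: register "this EID is reachable at my RLOC".
        self.map_server.register(eid, self.rloc)

    def send(self, dst_eid: str) -> Optional[Tuple[str, str]]:
        """Return (outer destination, inner destination), or None if the EID is unknown."""
        rloc = self.map_server.resolve(dst_eid)
        if rloc is None:
            return None  # handling of unknown destinations is out of scope here
        # The tunnel is addressed to the locator; the inner packet keeps the identity.
        return (rloc, dst_eid)


ms = MapServer()
edge_a = EdgeNode("192.0.2.11", ms)
edge_b = EdgeNode("192.0.2.12", ms)
edge_a.attach("10.50.0.20")        # laptop connects at edge A
print(edge_b.send("10.50.0.20"))   # ('192.0.2.11', '10.50.0.20')
edge_b.attach("10.50.0.20")        # laptop roams to edge B, keeping its IP address
print(edge_a.send("10.50.0.20"))   # ('192.0.2.12', '10.50.0.20')
```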

4. Virtual Extensible LAN (VXLAN)

VXLAN is a technology that extends Layer 2 segments over a Layer 3 infrastructure. Its 24-bit VXLAN Network Identifier (VNI) allows roughly 16 million segments, compared with 4,094 usable VLAN IDs, which is why VXLAN plays a key role in data centers and cloud networks that require large-scale network virtualization.

Cisco devices like the Nexus 9000 series use VXLAN to encapsulate Layer 2 frames inside UDP/IP packets, allowing scalable and efficient forwarding across distributed environments.

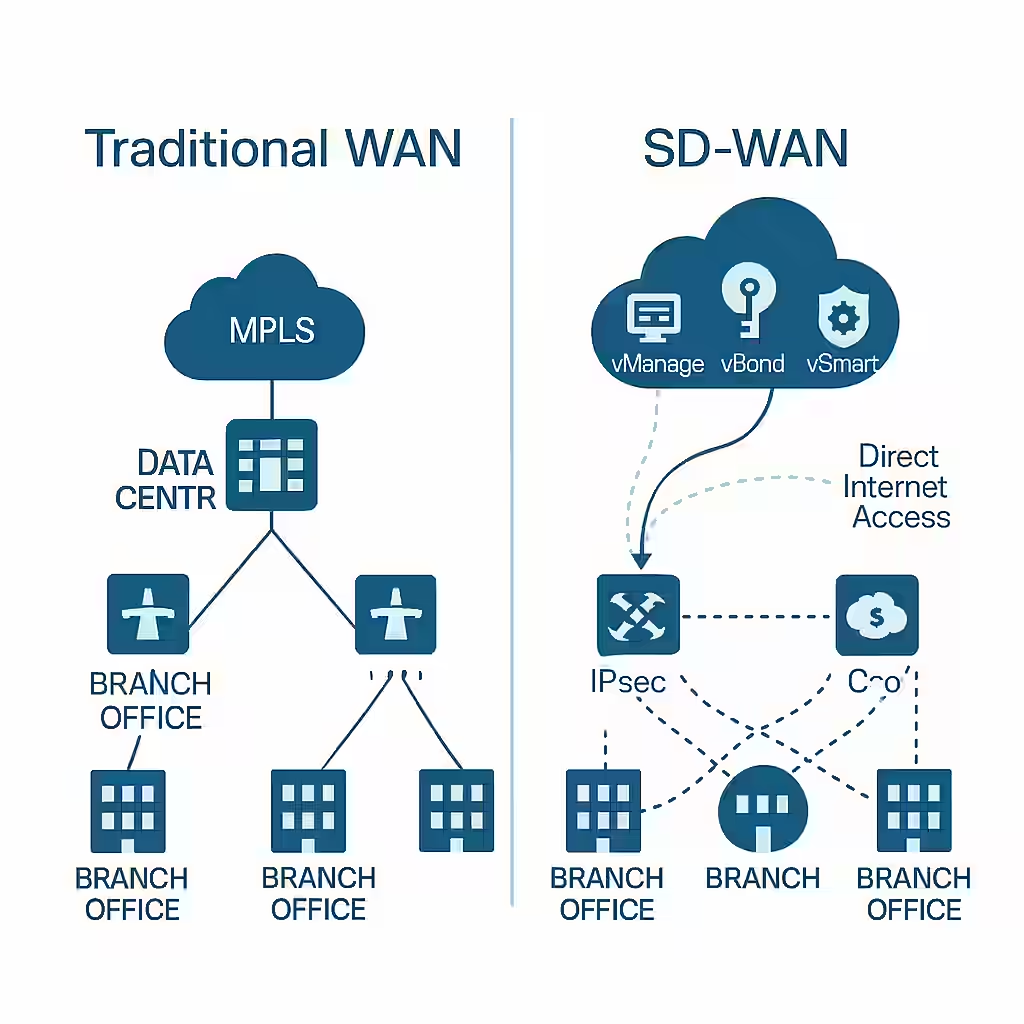
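As an illustration, the 8-byte VXLAN header defined in RFC 7348 can be built and parsed with Python’s `struct` module. The helper names are hypothetical, and only the VXLAN header itself is shown: on the wire, the result travels as a UDP payload (destination port 4789) behind outer IP and Ethernet headers.

```python
import struct


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # the 'I' flag: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """UDP payload = VXLAN header followed by the original Ethernet frame."""
    return vxlan_header(vni) + inner_frame


def parse_vni(udp_payload: bytes) -> int:
    """Recover the VNI from a received VXLAN payload."""
    _, second_word = struct.unpack("!II", udp_payload[:8])
    return second_word >> 8


print(vxlan_header(5000).hex())  # 0800000000138800
```

The original Ethernet frame is carried unchanged after the header, which is what lets two hosts behave as if they share a Layer 2 segment even when the fabric between them is routed.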

5. Cisco SD-WAN (Software-Defined WAN)

In WAN environments, Cisco’s SD-WAN solution changes how packets are forwarded between branch offices, data centers, and cloud services. Instead of static routing decisions, SD-WAN uses policies defined by business intent.

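The SD-WAN fabric dynamically chooses the best path for packets based on real-time conditions like latency, jitter, and packet loss, which the WAN edge routers measure on each tunnel using BFD probes. The vSmart controllers distribute these policies to the edge routers, and the vBond orchestrator authenticates devices as they join the fabric. The result is better application performance over less expensive internet circuits.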

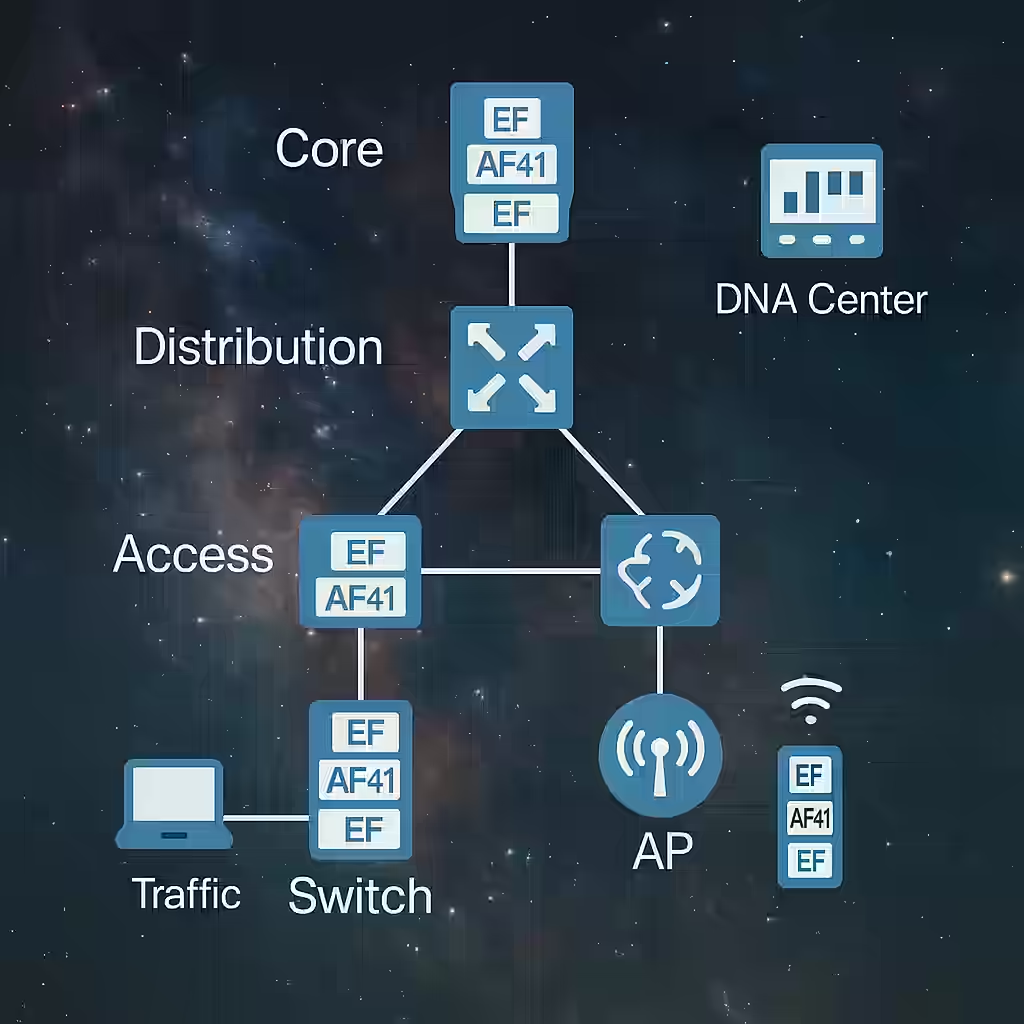
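A toy version of SLA-based path selection, written in Python. The path names, measurements, thresholds, and the fallback rule (least loss when no path meets the SLA) are all assumptions made for illustration; Cisco SD-WAN expresses this logic through centrally defined application-aware routing policies, not code like this.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PathStats:
    name: str
    latency_ms: float
    jitter_ms: float
    loss_pct: float


@dataclass
class SlaClass:
    max_latency_ms: float
    max_jitter_ms: float
    max_loss_pct: float

    def met_by(self, p: PathStats) -> bool:
        return (p.latency_ms <= self.max_latency_ms
                and p.jitter_ms <= self.max_jitter_ms
                and p.loss_pct <= self.max_loss_pct)


def choose_path(paths: List[PathStats], sla: SlaClass) -> Optional[PathStats]:
    """Prefer SLA-compliant paths (lowest latency first); else fall back to least loss."""
    if not paths:
        return None
    compliant = [p for p in paths if sla.met_by(p)]
    if compliant:
        return min(compliant, key=lambda p: p.latency_ms)
    return min(paths, key=lambda p: p.loss_pct)


# Hypothetical voice SLA and hypothetical live measurements for three transports.
voice_sla = SlaClass(max_latency_ms=150, max_jitter_ms=30, max_loss_pct=1.0)
paths = [
    PathStats("mpls", latency_ms=40, jitter_ms=5, loss_pct=0.1),
    PathStats("internet", latency_ms=25, jitter_ms=45, loss_pct=0.5),
    PathStats("lte", latency_ms=90, jitter_ms=20, loss_pct=2.0),
]
print(choose_path(paths, voice_sla).name)  # mpls (internet is faster but too jittery)
```

Re-running the selection as new measurements arrive is what makes the choice dynamic: if the internet path's jitter dropped below the threshold, voice traffic would move to it.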

6. Quality of Service (QoS) in Forwarding

Cisco integrates QoS mechanisms directly into the packet forwarding process. When forwarding packets, especially in congested environments, devices classify traffic (commonly by its DSCP marking) and place it into queues according to QoS policies, serving the priority queues first. This ensures critical applications such as VoIP and video conferencing receive the necessary bandwidth and low latency, even under heavy network load.

Verification Command:

show policy-map interface
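The classify-then-schedule idea can be sketched in Python with strict-priority queues keyed on DSCP values (46 for EF voice, 34 for AF41 video). The queue names and DSCP mapping are hypothetical. Pure strict priority can starve lower classes; Cisco’s low-latency queuing polices the priority queue to prevent this, which the sketch omits.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple

# Hypothetical class map: DSCP value -> queue name (EF = 46, AF41 = 34).
DSCP_TO_QUEUE = {46: "voice", 34: "video"}
QUEUE_ORDER = ["voice", "video", "default"]  # highest priority first


class PriorityScheduler:
    def __init__(self) -> None:
        self.queues: Dict[str, Deque[Tuple[str, int]]] = {q: deque() for q in QUEUE_ORDER}

    def enqueue(self, packet_id: str, dscp: int) -> None:
        # Classification: the DSCP marking selects the queue; unmatched -> default.
        queue = DSCP_TO_QUEUE.get(dscp, "default")
        self.queues[queue].append((packet_id, dscp))

    def dequeue(self) -> Optional[str]:
        # Scheduling: always serve the highest-priority non-empty queue.
        for name in QUEUE_ORDER:
            if self.queues[name]:
                packet_id, _ = self.queues[name].popleft()
                return packet_id
        return None


sched = PriorityScheduler()
for pid, dscp in [("bulk-1", 0), ("video-1", 34), ("voip-1", 46), ("bulk-2", 0)]:
    sched.enqueue(pid, dscp)
print([sched.dequeue() for _ in range(4)])  # ['voip-1', 'video-1', 'bulk-1', 'bulk-2']
```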

7. Forwarding Hardware Acceleration (ASICs)

Cisco’s custom-designed Application-Specific Integrated Circuits (ASICs), like the UADP (Unified Access Data Plane) and Cisco Silicon One, are purpose-built to accelerate packet forwarding at the hardware level. Because lookups and header rewrites happen in dedicated silicon rather than in software on a general-purpose CPU, these chips can forward traffic at line rate, making them well suited to high-performance switches and routers.

Conclusion

Packet forwarding is more than a background process; it’s the backbone of network communication. Whether you’re a network engineer, a system administrator, or simply someone curious about how networks operate, understanding packet forwarding helps you see the bigger picture of how devices communicate, how traffic is controlled, and how performance is optimized.